My PyTorch learning journey for my graduation project~~

Installation

```bash
conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia
```

Verification program:

```python
import torch

x = torch.rand(5, 3)  # random 5 x 3 tensor
print(x)
```

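Check whether the GPU driver and CUDA are usable:

```python
import torch

# True means PyTorch can see a usable GPU and CUDA runtime
print(torch.cuda.is_available())
```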

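Two handy tools for hands-on work:

dir(): shows what is inside a toolbox (a package) and inside each of its compartments (its modules and classes)

help(): shows how each tool is meant to be used (its documentation)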
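A minimal sketch of the two helpers. It uses the standard-library math module as the toolbox so that it runs without PyTorch installed; the same two calls work on torch, torch.cuda, and so on. The variable name public_names is only for illustration.

```python
import math

# dir() lists the names inside a "toolbox" (here the math module).
# Names starting with "_" are internal, so they are filtered out.
public_names = [name for name in dir(math) if not name.startswith("_")]
print(public_names[:5])

# help() prints the documentation of one "tool" from that toolbox.
help(math.sqrt)
```

With PyTorch installed, dir(torch.cuda) followed by help(torch.cuda.is_available) follows the same pattern.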

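Differences between platforms:

Python file: the whole file runs as one block

Python console: each line runs as one block

Jupyter: any group of lines (a cell) runs as one block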

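Loading data

Dataset: provides a way to fetch each data item together with its label

DataLoader: packages the data into the form (for example batches) that the network consumes later

A Dataset has to answer two questions:

How to get each data item and its label (__getitem__)

How many data items there are in total (__len__)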

```python
import os

from PIL import Image
from torch.utils.data import Dataset


class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        # Folder holding all images of one class, e.g. dataset/train/ant
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        # Return the idx-th image together with its label (the folder name)
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


root_dir = "dataset/train"
ants_label_dir = "ant"
bees_label_dir = "bee"
ants_dataset = MyData(root_dir, ants_label_dir)
bees_dataset = MyData(root_dir, bees_label_dir)
# Adding two datasets concatenates them
train_dataset = ants_dataset + bees_dataset

print(len(ants_dataset))
print(len(bees_dataset))
print(len(train_dataset))
```

Visualization

TensorBoard

TensorBoard has to be installed first:

```bash
conda install tensorboard
```

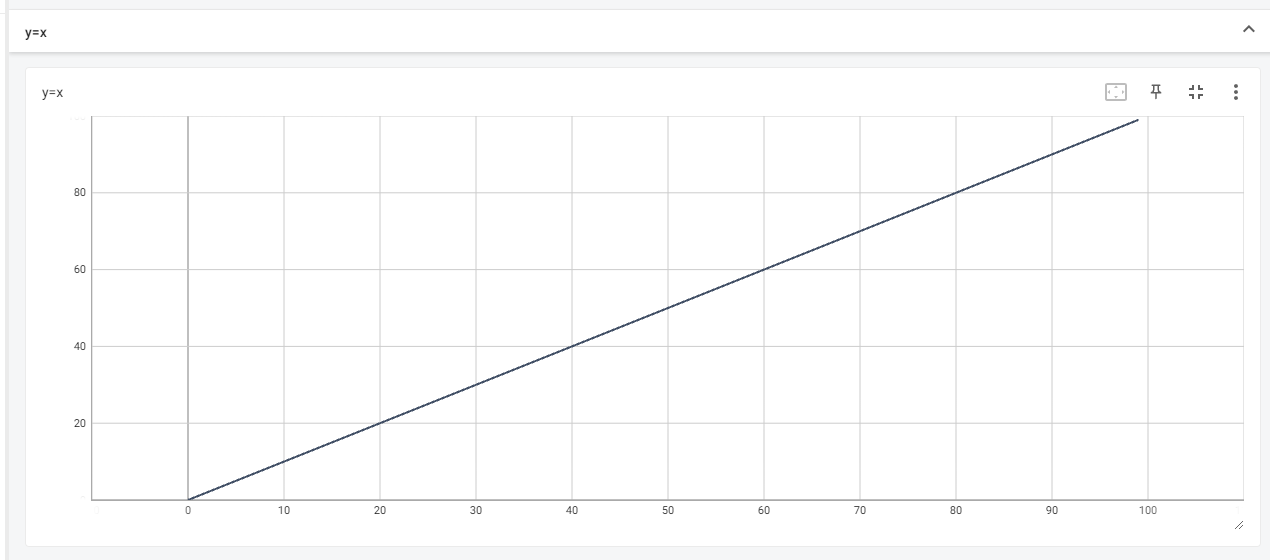

add_scalar:

```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

for i in range(100):
    # add_scalar(tag, scalar_value, global_step): plot y = x
    writer.add_scalar("y=x", i, i)

writer.close()
```

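This creates a logs folder that contains the TensorBoard event files.

To open it (the default port is 6006; the command below picks 6007 instead):

```bash
tensorboard --logdir=logs --port=6007
```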

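add_image:

```python
import numpy as np
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")
image_path = "xxx"
img_PIL = Image.open(image_path)
# add_image accepts a tensor or a NumPy array, not a PIL image
img_array = np.array(img_PIL)

# A single image converted from PIL is laid out as height x width x channels,
# so the layout must be stated explicitly with dataformats='HWC'
writer.add_image("test", img_array, 1, dataformats='HWC')

writer.close()
```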

```text
tag (str): Data identifier
img_tensor (torch.Tensor, numpy.ndarray, or string/blobname): Image data
global_step (int): Global step value to record
walltime (float): Optional override default walltime (time.time())
  seconds after epoch of event
dataformats (str): Image data format specification of the form
  CHW, HWC, HW, WH, etc.
```

Core file: transforms.py

ToTensor, Resize

How to use transforms

```python
from PIL import Image
from torchvision import transforms

img_path = "xxx"
img = Image.open(img_path)

# Create the transform object first, then call it on the image
tensor_trans = transforms.ToTensor()
tensor_img = tensor_trans(img)
```

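Why do we need the Tensor data type?

Tensor is the data type that neural networks work with. Besides the data itself, it carries the attributes that training needs, such as grad, requires_grad, device, and dtype.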

Common transforms:

```python
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

writer = SummaryWriter("logs")
img = Image.open("xxx")
print(img)

# ToTensor: PIL image -> tensor
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize: output = (input - mean) / std, per channel
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_norm = trans_norm(img_tensor)
writer.add_image("Normalize", img_norm, 1)

# Resize with an (h, w) pair: force the image to exactly 512 x 512
print(img.size)
trans_size = transforms.Resize((512, 512))
img_resize = trans_size(img)
img_size = trans_totensor(img_resize)
writer.add_image("Resize", img_size, 0)

# Resize with a single int: scale the shorter side to 512, keep the aspect ratio.
# Compose chains several transforms in order.
trans_resize_2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize_2, trans_totensor])
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)

# RandomCrop: cut a random 512 x 512 patch (the image must be at least that large)
trans_random = transforms.RandomCrop(512)
trans_compose_2 = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose_2(img)
    writer.add_image("RandomCrop", img_crop, i)

writer.close()
```

datasets

Using the built-in datasets

```python
import torchvision
from torch.utils.tensorboard import SummaryWriter

dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()  # note the parentheses: an instance, not the class
])

# Change root to any writable folder
train_set = torchvision.datasets.CIFAR10(root="/dataset", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="/dataset", train=False, transform=dataset_transform, download=True)

img, target = test_set[0]
print(img, target)
print(test_set.classes[target])
# img is a tensor here because ToTensor was applied, so PIL's img.show()
# is only available when the dataset is created without a transform

writer = SummaryWriter("logs")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)

writer.close()
```

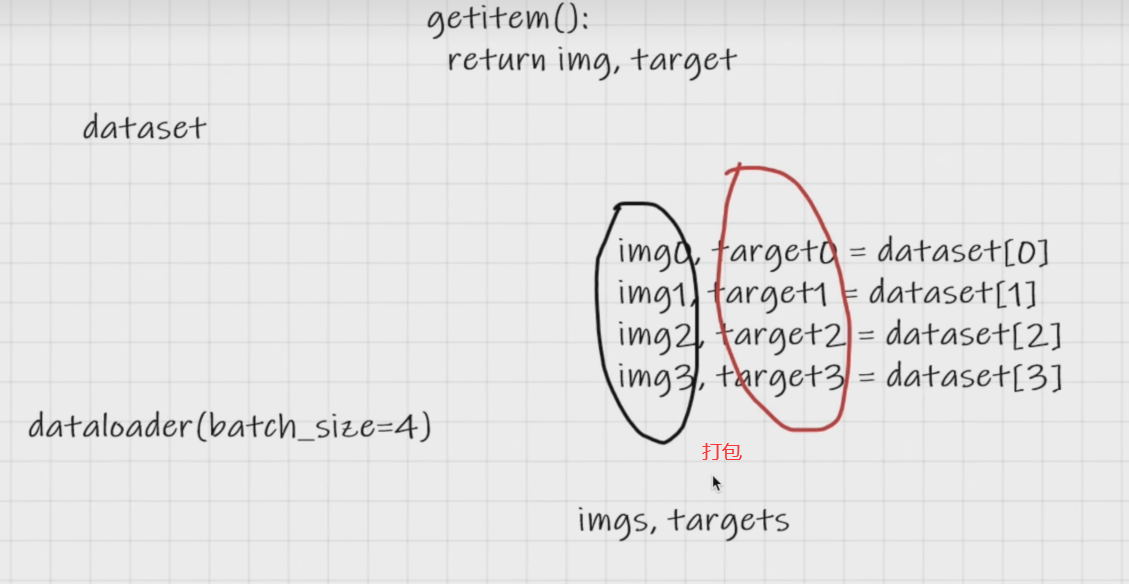

dataloader

Takes data out of a dataset and feeds it to the neural network in batches.

Documentation: https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader

```python
import torchvision
from torch.utils.data import DataLoader

test_data = torchvision.datasets.CIFAR10(root="/dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)

test_loader = DataLoader(dataset=test_data, batch_size=4, shuffle=True, num_workers=0, drop_last=False)

# A single sample from the dataset: shape 3 x 32 x 32
img, target = test_data[0]
print(img.shape)

# Each batch from the loader: images of shape 4 x 3 x 32 x 32 and 4 targets
for data in test_loader:
    imgs, targets = data
    print(imgs.shape)
    print(targets)
```

torch.nn

Documentation: https://pytorch.org/docs/stable/nn.html#module-torch.nn

Example:

```python
import torch
from torch import nn


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()

    def forward(self, input):
        # forward defines what happens when the module is called
        output = input + 1
        return output


network = NetWork()
x = torch.tensor(1.0)
print(x)
x = network(x)  # calls forward
print(x)
```

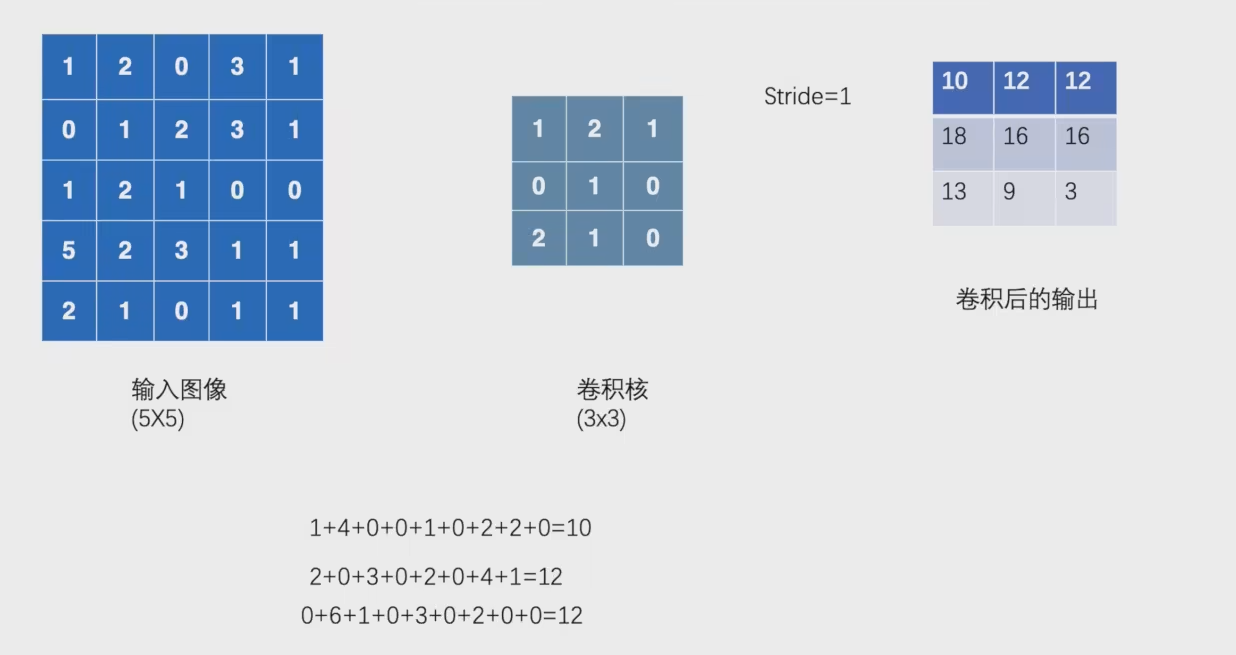

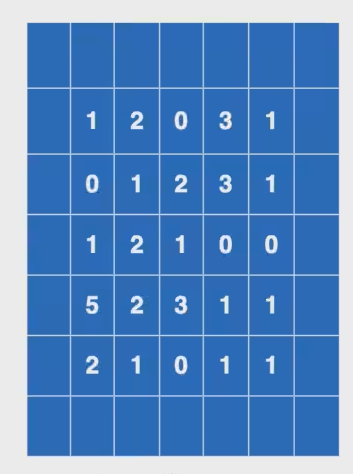

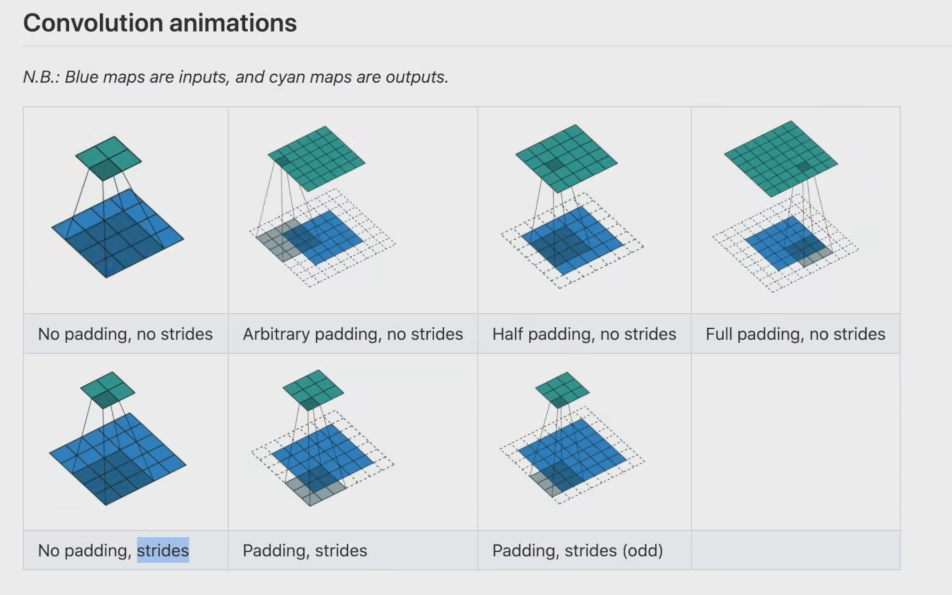

Convolution Layers

https://pytorch.org/docs/stable/nn.html#convolution-layers

With stride = 2, a 5 × 5 input and a 3 × 3 kernel produce a 2 × 2 matrix

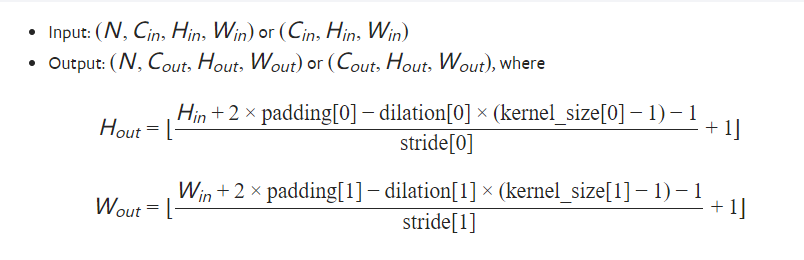

Output-size formula:
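As a hedged sketch, the output size along one spatial dimension can be computed with the formula from the PyTorch Conv2d documentation, floor((size + 2 × padding − dilation × (kernel_size − 1) − 1) / stride) + 1. The function name conv2d_output_size is made up for this example; the sizes are the 5 × 5 input and 3 × 3 kernel used in this section, plus a 32 × 32 CIFAR-10 image.

```python
def conv2d_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a 2D convolution."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv2d_output_size(5, 3, stride=1))             # 3
print(conv2d_output_size(5, 3, stride=2))             # 2
print(conv2d_output_size(5, 3, stride=1, padding=1))  # 5
print(conv2d_output_size(32, 3))                      # 30
```

The same function applies to height and width separately, so a 32 × 32 image convolved with a 3 × 3 kernel and no padding comes out as 30 × 30.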

Code:

```python
import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])
print(input.shape)
print(kernel.shape)

# conv2d expects 4D tensors: (batch, channels, height, width)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)  # 3 x 3 output
print(output)

output2 = F.conv2d(input, kernel, stride=2)  # 2 x 2 output
print(output2)

output3 = F.conv2d(input, kernel, stride=1, padding=1)  # 5 x 5 output
print(output3)
```

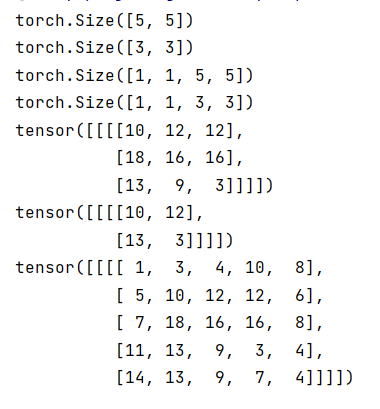

Result:

How the padding parameter works:

padding=1: the input is extended by one cell on every side (filled with zeros by default)

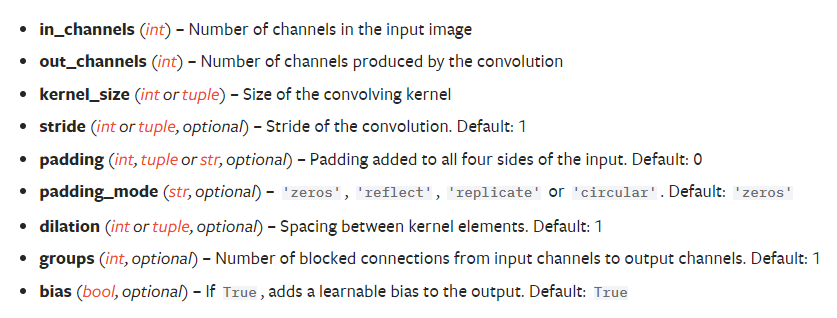

torch.nn.Conv2d(*in_channels*, *out_channels*, *kernel_size*, *stride=1*, *padding=0*, *dilation=1*, *groups=1*, *bias=True*, *padding_mode='zeros'*, *device=None*, *dtype=None*)

Code:

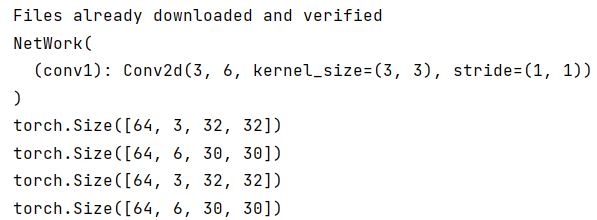

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=(3, 3), stride=(1, 1), padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


net = NetWork()
print(net)

writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data
    output = net(imgs)
    print(imgs.shape)    # torch.Size([64, 3, 32, 32]) for a full batch
    print(output.shape)  # torch.Size([64, 6, 30, 30]) for a full batch
    writer.add_images("input", imgs, step)

    # TensorBoard cannot display 6-channel images, so fold the
    # extra channels into the batch dimension: 6 channels -> 3 channels
    output = torch.reshape(output, (-1, 3, 30, 30))
    print(output.shape)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

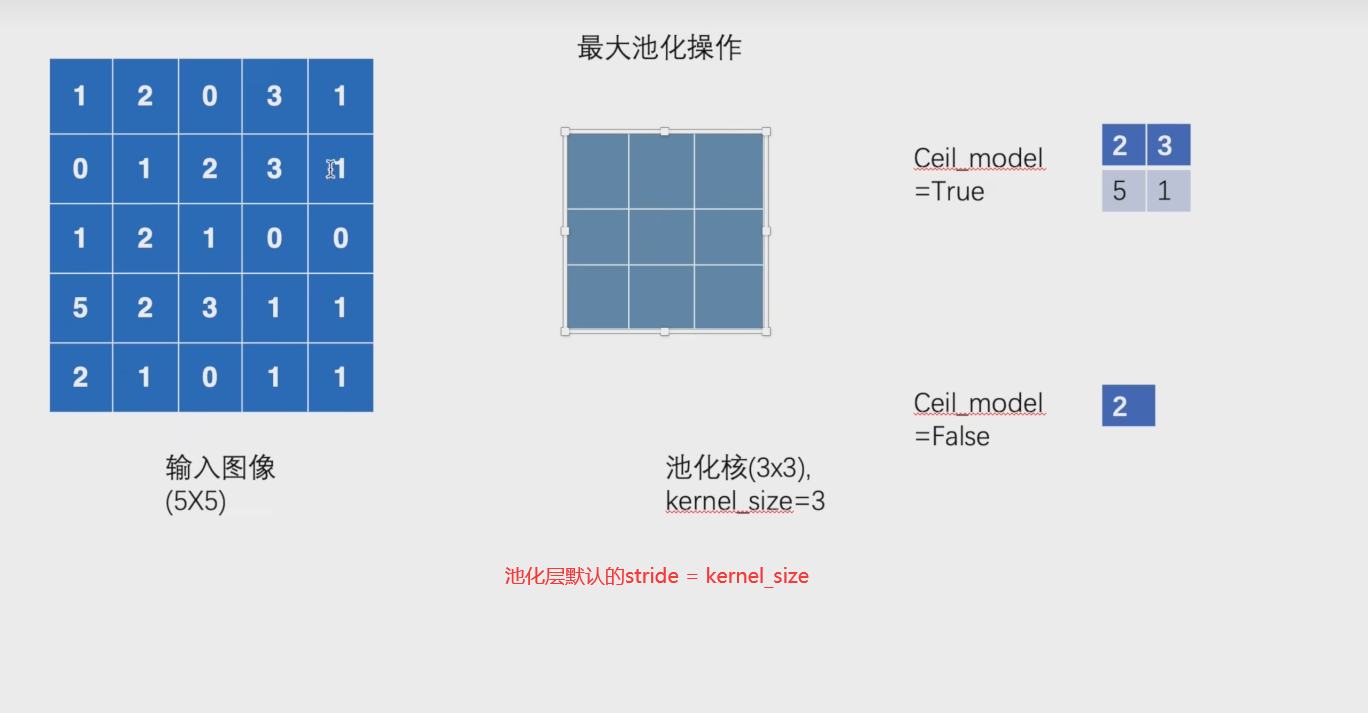

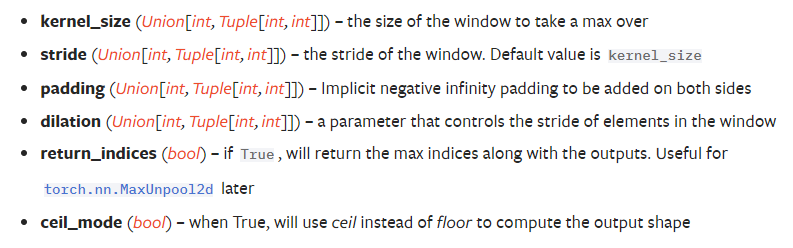

Pooling Layers

torch.nn.MaxPool2d(*kernel_size*, *stride=None*, *padding=0*, *dilation=1*, *return_indices=False*, *ceil_mode=False*)

Purpose of pooling: keep the salient features of the data while reducing its size

```python
import torch
from torch import nn
from torch.nn import MaxPool2d

# float32 is used because max pooling is not implemented
# for integer tensors in some PyTorch versions
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        # ceil_mode=True also keeps windows that only partly cover the input
        self.maxpool1 = MaxPool2d(kernel_size=(3, 3), ceil_mode=True)

    def forward(self, x):
        x = self.maxpool1(x)
        return x


network = NetWork()
output = network(input)
print(output)
```
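To see what ceil_mode changes, here is a pure-Python sketch of max pooling on the same 5 × 5 matrix. It is an illustration under simplifying assumptions (stride equal to the kernel size, no padding, input at least as large as the kernel), not PyTorch's implementation; the helper name max_pool2d is chosen only for this example.

```python
import math


def max_pool2d(matrix, kernel_size, ceil_mode=False):
    """Max pooling over a 2D list, stride == kernel_size, no padding."""
    rows, cols = len(matrix), len(matrix[0])
    rounder = math.ceil if ceil_mode else math.floor
    out_rows = rounder((rows - kernel_size) / kernel_size) + 1
    out_cols = rounder((cols - kernel_size) / kernel_size) + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # With ceil_mode the last window may stick out of the input,
            # so it is clipped to the valid area.
            window = [
                matrix[r][c]
                for r in range(i * kernel_size, min((i + 1) * kernel_size, rows))
                for c in range(j * kernel_size, min((j + 1) * kernel_size, cols))
            ]
            row.append(max(window))
        output.append(row)
    return output


matrix = [[1, 2, 0, 3, 1],
          [0, 1, 2, 3, 1],
          [1, 2, 1, 0, 0],
          [5, 2, 3, 1, 1],
          [2, 1, 0, 1, 1]]

print(max_pool2d(matrix, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool2d(matrix, 3, ceil_mode=False))  # [[2]]
```

With ceil_mode=False only the single complete 3 × 3 window is kept, so the 25 input values shrink to one; with ceil_mode=True the three partial windows along the right and bottom edges contribute as well.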

Applied to images:

```python
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=(3, 3), ceil_mode=True)

    def forward(self, x):
        x = self.maxpool1(x)
        return x


network = NetWork()  # the model must be instantiated before it is used

writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    # Pooling keeps the 3 channels, so the output can be shown directly
    output = network(imgs)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

Non-linear Activations

Documentation: https://pytorch.org/docs/stable/nn.html#non-linear-activations-weighted-sum-nonlinearity

ReLU:

```python
import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
# Reshape to (batch, channels, height, width) and pass the reshaped tensor on
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)
print(input)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.relu1 = ReLU()

    def forward(self, x):
        # ReLU replaces negative values with 0 and keeps the rest
        x = self.relu1(x)
        return x


net = NetWork()
output = net(input)
print(output)
```

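Normalization Layers

Speed up the training of neural networks

Reference: https://pytorch.org/docs/stable/nn.html#normalization-layers

Typical usage:

```python
import torch
from torch import nn

# With learnable parameters (affine=True is the default)
m = nn.BatchNorm2d(100)
# Without learnable parameters
m = nn.BatchNorm2d(100, affine=False)
input = torch.randn(20, 100, 35, 45)
output = m(input)

# The affine argument of nn.BatchNorm2d decides whether the layer learns the
# parameters of an affine transform. With affine=True the layer learns two
# parameters, gamma and beta, which scale and shift the normalized data:
# the normalized data is multiplied by gamma and then beta is added.
# With affine=False no such parameters exist, which is equivalent to
# gamma = 1 and beta = 0, so the normalized data is neither scaled nor shifted.
```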

Softmax Layers

Reference: https://pytorch.org/docs/stable/generated/torch.nn.Softmax2d.html#torch.nn.Softmax2d

How it differs from log_softmax: https://blog.csdn.net/qq_43183860/article/details/123929216
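The relationship between the two can be sketched in plain Python: softmax turns scores into probabilities that sum to 1, and log_softmax is the logarithm of those probabilities, computed in a numerically more stable way. The functions below are hand-written illustrations rather than the PyTorch implementations, and the logits [1.0, 2.0, 3.0] are arbitrary example values.

```python
import math


def softmax(logits):
    # Subtracting the maximum avoids overflow in exp without changing the result
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits):
    # log(softmax(x)) computed directly as x - logsumexp(x)
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum_exp for x in logits]


logits = [1.0, 2.0, 3.0]
probs = softmax(logits)
log_probs = log_softmax(logits)

print(probs)       # probabilities, sum to 1
print(log_probs)   # log-probabilities, all <= 0
```

Taking math.log of each entry of probs gives the same numbers as log_probs; the direct form avoids computing log of a value that has underflowed to 0.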

Recurrent Layers

Reference: https://pytorch.org/docs/stable/nn.html#recurrent-layers

RNN:Recurrent Neural Network

LSTM:Long Short-Term Memory

GRU:Gated Recurrent Unit

Transformer Layers

Reference: https://pytorch.org/docs/stable/nn.html#transformer-layers

Linear Layers

Reference: https://pytorch.org/docs/stable/nn.html#linear-layers

nn.Linear

```python
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)

# drop_last=True: the last batch has only 16 images, which would not
# match the 196608 input features expected by the linear layer
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        # 196608 = 64 * 3 * 32 * 32: one whole batch flattened into a single vector
        self.linear1 = Linear(196608, 10)

    def forward(self, x):
        x = self.linear1(x)
        return x


network = NetWork()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    # Flatten the whole batch into one row
    output = torch.reshape(imgs, (1, 1, 1, -1))
    print(output.shape)
    output = network(output)
    print(output.shape)
```

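Dropout Layers

Mainly used to prevent overfitting: during training, each element is randomly set to 0 with probability p

```python
import torch
from torch import nn

m = nn.Dropout(p=0.2)
input = torch.randn(20, 16)
output = m(input)
```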

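Sparse Layers

Work well on sparse data; commonly used in natural language processing, recommender systems, image processing, and so on

Embedding: converts sparse categorical data into dense vector representations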
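Conceptually, an embedding is a lookup table with one dense row per category ID. The pure-Python sketch below illustrates that idea and its equivalence to multiplying a one-hot vector by the table; it is not how nn.Embedding is implemented, and the sizes and random values are hypothetical.

```python
import random

random.seed(0)

num_embeddings = 5   # number of distinct categories (hypothetical)
embedding_dim = 3    # length of each dense vector (hypothetical)

# One row per category ID. A real embedding layer learns these values.
table = [[random.uniform(-1, 1) for _ in range(embedding_dim)]
         for _ in range(num_embeddings)]


def embed(ids):
    """Dense representation: simply pick the rows for the given IDs."""
    return [table[i] for i in ids]


def one_hot_times_table(i):
    """Sparse representation: a one-hot vector multiplied by the table."""
    one_hot = [1.0 if k == i else 0.0 for k in range(num_embeddings)]
    return [sum(one_hot[k] * table[k][d] for k in range(num_embeddings))
            for d in range(embedding_dim)]


vectors = embed([0, 3, 3])
print(vectors)
print(one_hot_times_table(3))
```

The lookup and the one-hot product give the same vector, but the lookup never materializes the mostly-zero one-hot input, which is why it suits sparse categorical data.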

Distance Functions

CosineSimilarity

PairwiseDistance

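Loss Functions

Reference: https://pytorch.org/docs/stable/nn.html#loss-functions

```python
import torch
from torch import nn
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# L1 loss: sum of absolute differences (reduction='mean' would average them)
loss = L1Loss(reduction='sum')
result = loss(inputs, targets)

print(result)
```

```python
# Mean squared error, using the same inputs and targets as above
loss_mse = nn.MSELoss()
result_mse = loss_mse(inputs, targets)

print(result_mse)
```

```python
# CrossEntropyLoss expects raw scores of shape (batch, classes)
# and class indices of shape (batch,)
logits = torch.tensor([[1.0, 2.0, 3.0]], requires_grad=True)
target = torch.tensor([2])
loss = nn.CrossEntropyLoss()
result = loss(logits, target)
# backward() computes the gradients; it needs a tensor that requires grad
result.backward()
```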
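The three losses can be checked by hand. The sketch below recomputes them in plain Python for inputs [1, 2, 3] and targets [1, 2, 5]; for cross-entropy it treats [1, 2, 3] as raw class scores with class 2 as the correct answer, which is an illustrative assumption. The helper name cross_entropy is made up for this example.

```python
import math

inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# L1 loss with reduction='sum': |1-1| + |2-2| + |3-5| = 2
l1_sum = sum(abs(x - y) for x, y in zip(inputs, targets))

# MSE loss with the default mean reduction: (0 + 0 + 4) / 3
mse_mean = sum((x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)


def cross_entropy(logits, target_class):
    """-logits[target] + log(sum(exp(logits))) for a single sample."""
    log_sum_exp = math.log(sum(math.exp(z) for z in logits))
    return -logits[target_class] + log_sum_exp


ce = cross_entropy(inputs, 2)

print(l1_sum)    # 2.0
print(mse_mean)  # 1.3333...
print(ce)        # about 0.4076
```

The cross-entropy value is small because the correct class already has the highest score; choosing class 0 as the target instead would give a larger loss.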

Optimizer

torch.optim

Reference: https://pytorch.org/docs/stable/optim.html

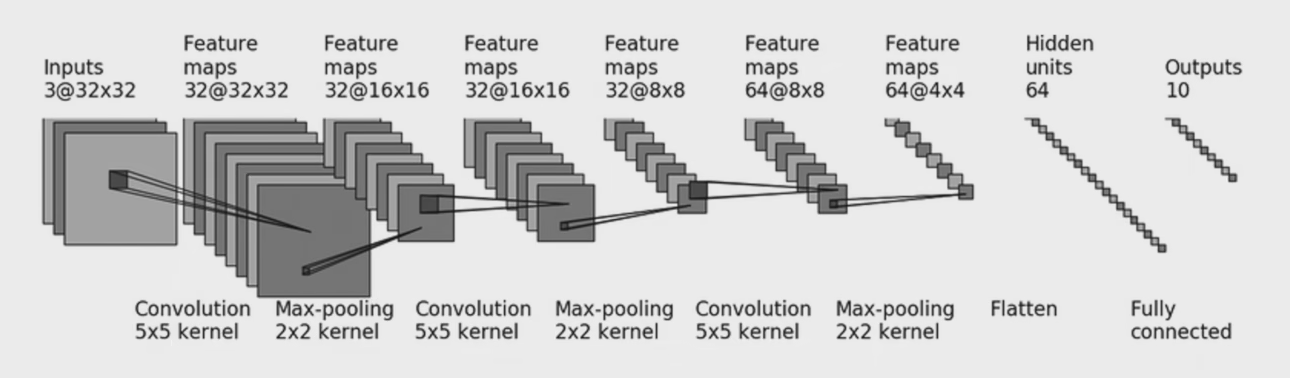

Example: image classification in practice

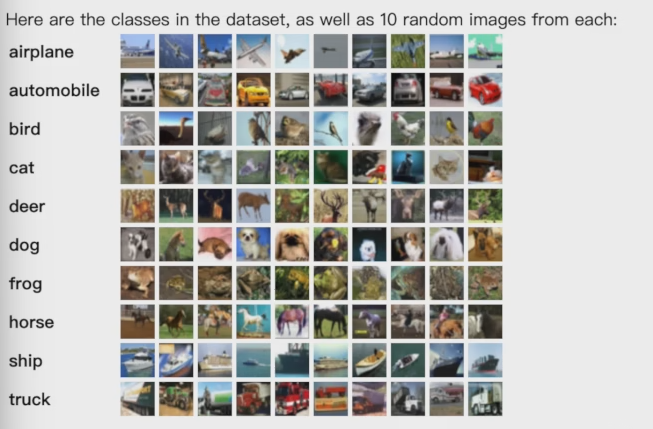

CIFAR-10 -> classify each image into one of 10 classes

Network architecture:

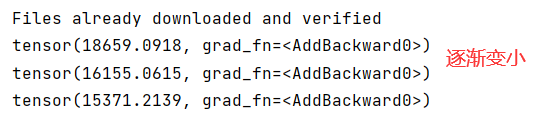

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, (5, 5), padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 1024 = 64 channels * 4 * 4
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


network = NetWork()
optimizer = torch.optim.SGD(network.parameters(), lr=0.01)
loss = nn.CrossEntropyLoss()

for epoch in range(10):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = network(imgs)
        result_loss = loss(outputs, targets)
        optimizer.zero_grad()   # clear the gradients of the previous step
        result_loss.backward()  # compute new gradients
        optimizer.step()        # update the weights
        # .item() takes the plain number, so no computation graph is kept alive
        running_loss = running_loss + result_loss.item()
    print(running_loss)
```

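The difference between epoch and batch_size:

Scope: an epoch is one full pass over the entire dataset, while the batch size is the number of samples used for each weight update.

Impact: the number of epochs usually affects how thoroughly the model is trained and the risk of overfitting. The batch size affects the speed and stability of training as well as the final performance of the model.

Calculation: the number of batches per epoch is the dataset size divided by the batch size. For example, a dataset of 50000 samples with a batch size of 64 gives 781 full batches (50000 / 64 = 781.25, rounded down to 781). The remaining 16 samples form one extra, smaller batch unless incomplete batches are dropped (drop_last=True in DataLoader), so the default is 782 batches.

Weight updates: within each epoch the model updates its weights once per batch, using that batch's gradients. By the end of an epoch, every sample in the dataset has contributed to a weight update.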
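The batch arithmetic can be sketched in a few lines. The helper name batches_per_epoch is hypothetical; its drop_last argument mirrors the DataLoader option of the same name.

```python
import math


def batches_per_epoch(dataset_size, batch_size, drop_last=False):
    """Number of weight updates in one epoch."""
    if drop_last:
        # Only complete batches count
        return dataset_size // batch_size
    # The leftover samples form one extra, smaller batch
    return math.ceil(dataset_size / batch_size)


dataset_size = 50000
batch_size = 64
epochs = 10

full_batches = batches_per_epoch(dataset_size, batch_size, drop_last=True)
all_batches = batches_per_epoch(dataset_size, batch_size)
leftover = dataset_size - full_batches * batch_size

print(full_batches)          # 781
print(all_batches)           # 782
print(leftover)              # 16
print(all_batches * epochs)  # 7820 updates over 10 epochs
```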

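Saving and loading models

Method 1: save the model structure together with its parameters

Save:

```python
torch.save(network, "CIFAR10_net1.pth")
```

Load:

```python
# The NetWork class definition must be importable in the loading script.
# Newer PyTorch releases may also require torch.load(..., weights_only=False)
# to load a whole model object.
model = torch.load("CIFAR10_net1.pth")
print(model)
```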

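Method 2: save only the model parameters

Save:

```python
torch.save(network.state_dict(), "CIFAR10_net2.pth")
```

Load:

```python
# Rebuild the structure first, then fill in the saved parameters
model = NetWork()
model.load_state_dict(torch.load("CIFAR10_net2.pth"))
print(model)
```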

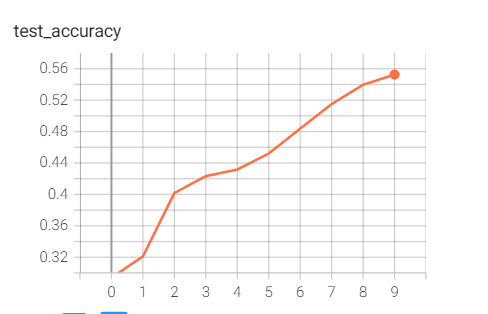

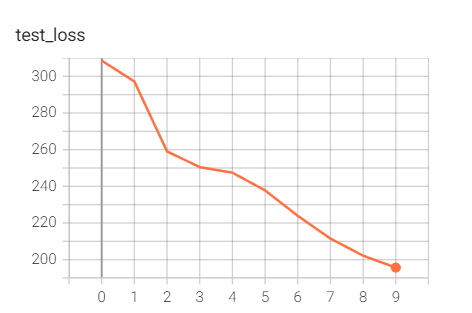

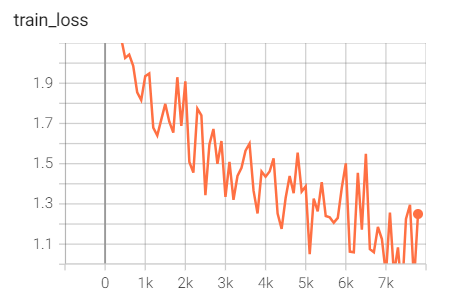

The complete model training routine

example.py

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from model import NetWork

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="data", train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10(root="data", train=False, transform=torchvision.transforms.ToTensor(), download=True)

train_data_size = len(train_data)
test_data_size = len(test_data)
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the data in batches
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Build the network, the loss function, and the optimizer
network = NetWork()
loss_fn = nn.CrossEntropyLoss()
learning_rate = 1e-2
optimizer = torch.optim.SGD(network.parameters(), lr=learning_rate)

total_train_step = 0  # number of training steps so far
epoch = 10            # number of training epochs

writer = SummaryWriter("logs")

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training phase
    network.train()
    for data in train_dataloader:
        imgs, targets = data
        outputs = network(imgs)
        loss = loss_fn(outputs, targets)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation phase: no gradients are needed
    network.eval()
    total_test_loss = 0.0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = network(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # argmax(1) picks the predicted class of each sample
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, i)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, i)

    # Save a checkpoint after every epoch
    torch.save(network, "network_{}.pth".format(i))
    print("Model saved")

writer.close()
```

model.py

```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, (5, 5), padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


# Quick shape check; the guard keeps it from running when model.py is imported
if __name__ == "__main__":
    network = NetWork()
    input = torch.ones((64, 3, 32, 32))
    output = network(input)
    print(output.shape)  # torch.Size([64, 10])
```

Results:

Training on a GPU

Method 1: .cuda()

```python
import time

import torch
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, (5, 5), padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, (5, 5), padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="data", train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10(root="data", train=False, transform=torchvision.transforms.ToTensor(), download=True)

train_data_size = len(train_data)
test_data_size = len(test_data)
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# .cuda() requires a CUDA-capable GPU.
# Three things are moved to the GPU: the model, the loss function, and the data.
network = NetWork()
network = network.cuda()

loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.cuda()

learning_rate = 1e-2
optimizer = torch.optim.SGD(network.parameters(), lr=learning_rate)

total_train_step = 0  # number of training steps so far
epoch = 10            # number of training epochs

writer = SummaryWriter("logs")
start_time = time.time()

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training phase
    network.train()
    for data in train_dataloader:
        imgs, targets = data
        imgs = imgs.cuda()
        targets = targets.cuda()
        outputs = network(imgs)
        loss = loss_fn(outputs, targets)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since training started
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation phase: no gradients are needed
    network.eval()
    total_test_loss = 0.0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs = imgs.cuda()
            targets = targets.cuda()
            outputs = network(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, i)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, i)

    torch.save(network, "network_{}.pth".format(i))
    print("Model saved")

writer.close()
```

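Method 2: .to(device)

```python
# Fragment: these lines replace the .cuda() calls in the training script

# Define the training device
device = torch.device("cpu")
# Or pick the GPU automatically when one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Move the model and the loss function to the device
network.to(device)
loss_fn.to(device)

# Inside the training and test loops, move every batch as well
imgs = imgs.to(device)
targets = targets.to(device)
```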

Free GPU time on Google Colab:

https://colab.research.google.com/

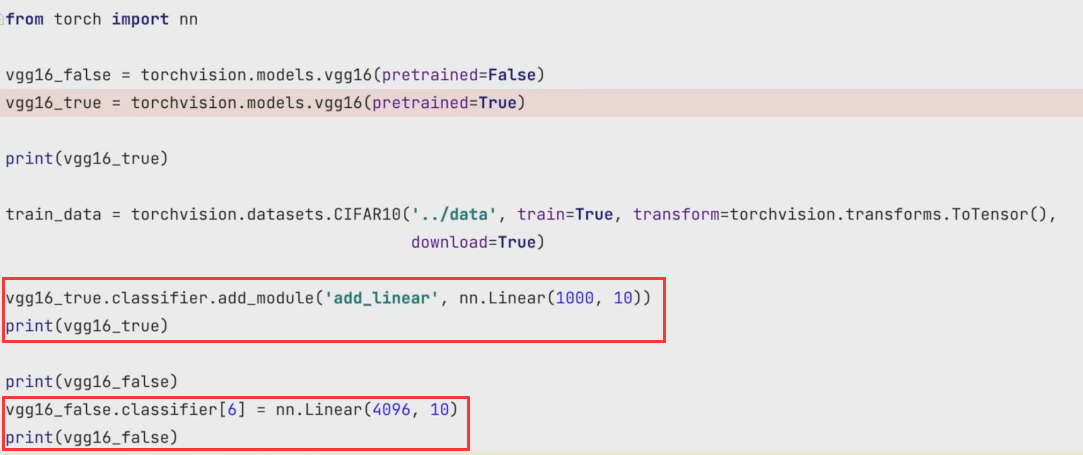

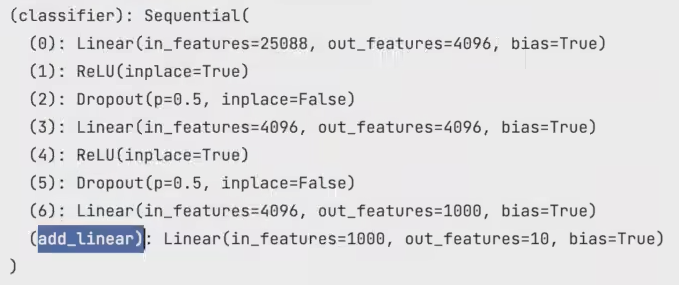

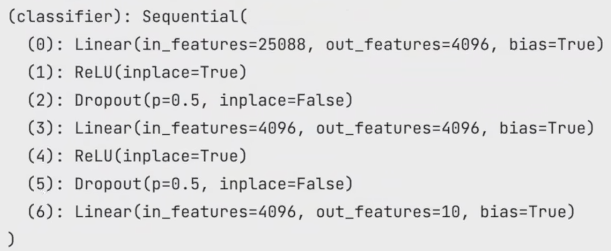

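Transfer learning

Adapt an existing model to a new task

PS: pretrained=True means the local model starts from the published pretrained parameters; with pretrained=False the model starts from freshly initialized parameters (newer torchvision versions replace this flag with the weights argument)

Adding a layer:

Modifying a layer: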

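The complete model validation routine

Take an already-trained model and feed it an input

```python
import torch
import torchvision
from PIL import Image

from model import NetWork  # the class must be importable to load the saved model

image = Image.open("xxxx")
# PNG files may carry a fourth (alpha) channel; keep only the 3 color channels
image = image.convert("RGB")

transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)

# map_location lets a model that was saved on a GPU be loaded on a CPU-only machine
model = torch.load("network_0.pth", map_location=torch.device("cpu"))
print(model)

# The network expects a batch dimension: (batch, channels, height, width)
image = torch.reshape(image, (1, 3, 32, 32))
model.eval()
with torch.no_grad():
    output = model(image)
print(output)

# Index of the highest score = predicted class
print(output.argmax(1))
```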